
This module provides the Hmm class, which supports modeling with hidden Markov models.

Bases: onyx.util.state_machine.ConfigurationStateMachineMixin

A five-configuration, hypothesis-recognizing HMM used by decoders.

The hmm must be an instance of Hmm that contains the static modeling information of the HMM.

Optional user_data is an arbitrary client-provided object that is available through the user_data attribute of the instance, but is otherwise not used.

The returned instance will be in its reset configuration.

In typical usage, an HMM is cycled through the following four methods and their resulting state-machine configurations:

Method prune_states() can be called while in any of the above configurations in order to deactivate states and their hypotheses. On a given cycle, method get_lost_activations() returns a tuple of the activations that have been abandoned during that cycle because of network combining by pass_in(), modeling by apply_likelihoods(), or by explicit pruning by prune_states() or prune_all().

The scores and activation IDs returned by get_sentential_hypotheses() represent conditional likelihoods for the best path to each HMM state that constitutes a recognition. The scores returned by get_output() also include the network transitions that prepare hypotheses for moving into successor HMMs. The distinction between the scores from get_sentential_hypotheses() and get_output() mirrors the grammatical distinction between the cost of reaching a sentential grammar handle and the additional known cost of starting a successor terminal.

Applies the HMM model’s emission likelihood scores for observation to the HMM states.

Places the instance in its likelihood configuration. This method can only be called when in configuration pass_in.

Verify that the current configuration is one of configs.

Raises ValueError if the current configuration is not in configs.

The current configuration of the state machine.

Returns a tuple of the activation_ids that have been deactivated during the current cycle. Activation IDs can only be introduced into the HMM by input().

For each configuration cycle, the initial set of active IDs is determined when input() is called, or at the start of pass_in() if input() is not called. IDs are removed by calls to methods pass_in(), apply_likelihoods(), prune_states(), and prune_all().

This method can be called from any configuration except reset.

Return a numpy array of length num_outputs of output scores suitable for use in a call to a successor instance’s input() method.

The array will be degenerate (all zero scores) if has_sentential_hypotheses() is False. XXX should such usage be an error?

It is an error to call this method unless the HMM is in configuration output.

Return a pair of parallel numpy arrays, (scores, activation_ids), of the scores and activation_ids of the hypotheses that the HMM has recognized. See also has_sentential_hypotheses().

The arrays will be degenerate (empty) if has_sentential_hypotheses() is False. XXX should such usage be an error?

It is an error to call this method unless the HMM is in configuration likelihood or output.

Return True if the HMM has hypotheses that constitute a successful recognition of what it is that the HMM models, False otherwise.

It is an error to call this method unless the HMM is in configuration likelihood or output.

The Hmm with the static modeling information used by the instances.

This attribute is available from any configuration.

Puts scores with activation_id into the virtual input states of the HMM. The scores must be an iterable that yields num_inputs activation scores for the hypotheses entering the HMM’s input states. In the HMM, each such hypothesis is tagged with the client’s activation_id token.

Places the instance in its input configuration. This method can be called when in configuration reset, likelihood, or output.

Number of input states. See also input().

This attribute is available from any configuration.

Number of output states. See also get_output().

This attribute is available from any configuration.

Moves and combines hypotheses from the input states and HMM states according to the columns of the transition matrix that target the HMM states. This method can cause hypotheses to be deactivated.

Places the instance in its pass_in configuration. This method can be called when in configuration reset, input, likelihood, or output.
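The combining step described above can be illustrated with a minimal pure-Python sketch. It makes several assumptions:

- Combination is Viterbi-style: keep the best-scoring predecessor, as opposed to the SUMMED_COMBINATION mode used in the doctests below.
- Scores live in one flat vector laid out as [inputs | HMM states | outputs].
- Inactive slots carry the ID -1, as in the token_string printouts below.

The name pass_in_sketch is hypothetical; this is not the onyx implementation. An activation ID that no longer appears in any slot after the step is reported as lost, which is the kind of bookkeeping get_lost_activations() exposes.

```python
def pass_in_sketch(trans, scores, ids, num_inputs, num_states):
    """Viterbi-style combination into the real HMM states (hypothetical sketch).

    trans  -- square transition matrix (list of rows) over inputs, states, outputs
    scores -- current score per node; ids -- activation ID per node (-1 if inactive)
    Returns (new_scores, new_ids, lost_ids) in the same node layout.
    """
    n = len(trans)
    new_scores = [0.0] * n
    new_ids = [-1] * n
    # Only the columns that target real HMM states are used; inputs are consumed.
    for j in range(num_inputs, num_inputs + num_states):
        best_score, best_id = 0.0, -1
        for i in range(num_inputs + num_states):
            candidate = scores[i] * trans[i][j]
            if ids[i] != -1 and candidate > best_score:
                best_score, best_id = candidate, ids[i]
        new_scores[j], new_ids[j] = best_score, best_id
    # This sketch leaves the output slots untouched.
    for j in range(num_inputs + num_states, n):
        new_scores[j], new_ids[j] = scores[j], ids[j]
    before = {a for a in ids if a != -1}
    after = {a for a in new_ids if a != -1}
    return new_scores, new_ids, before - after


# Two inputs compete for a single state; the weaker activation (25) is lost.
trans = [
    [0.0, 0.0, 1.0, 0.0],  # I0 -> S0
    [0.0, 0.0, 1.0, 0.0],  # I1 -> S0
    [0.0, 0.0, 0.5, 0.5],  # S0 -> S0, O0
    [0.0, 0.0, 0.0, 0.0],  # O0 has no outgoing transitions
]
scores, ids, lost = pass_in_sketch(trans, [0.25, 0.75, 0.0, 0.0], [25, 75, -1, -1], 2, 1)
print(scores, ids, lost)  # [0.0, 0.0, 0.75, 0.0] [-1, -1, 75, -1] {25}
```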

Moves and combines hypothesis scores from the HMM states according to the columns of the transition matrix that target the virtual output states.

Places the instance in its output configuration. This method can be called when in configuration likelihood.

This operation is wasted effort if has_sentential_hypotheses() is False. XXX should such usage be an error?

Deactivate all input and HMM states. This is shorthand for calling prune_states() with threshold=numpy.inf.

This method can be called from any configuration.

Deactivate input and HMM states with scores that are below threshold.

This method can be called from any configuration.
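Threshold pruning and its effect on the lost-activation bookkeeping can be sketched the same way. This assumes a flat list of input and HMM state scores, with -1 marking inactive slots; prune_sketch is a hypothetical name. Passing math.inf as the threshold deactivates every slot holding a finite score, which matches the description of prune_all() above.

```python
import math


def prune_sketch(scores, ids, threshold):
    """Deactivate slots scoring below threshold (hypothetical sketch).

    scores and ids are parallel lists; an inactive slot holds 0.0 and -1.
    Returns (new_scores, new_ids, lost_ids), where lost_ids are activation IDs
    that no longer appear in any slot.
    """
    new_scores, new_ids = list(scores), list(ids)
    for k, score in enumerate(scores):
        if ids[k] != -1 and score < threshold:
            new_scores[k], new_ids[k] = 0.0, -1
    before = {a for a in ids if a != -1}
    after = {a for a in new_ids if a != -1}
    return new_scores, new_ids, before - after


# ID 8 is pruned from the second slot but survives in the third, so nothing is lost.
print(prune_sketch([0.5, 0.01, 0.2], [7, 8, 8], 0.1))  # ([0.5, 0.0, 0.2], [7, -1, 8], set())
# prune_all()-style call: every finite score is below infinity.
print(sorted(prune_sketch([0.5, 0.01, 0.2], [7, 8, 8], math.inf)[2]))  # [7, 8]
```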

Places the instance in its reset configuration. This method can be called from any configuration.

Switch the state machine to configuration. Returns the previous configuration.

Raises ValueError if the transition isn’t valid.
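The configuration rules scattered through the method descriptions above fit in a small transition table:

- input() may follow reset, likelihood, or output.
- pass_in() may follow reset, input, likelihood, or output.
- apply_likelihoods() may follow only pass_in.
- The output configuration may follow only likelihood.
- reset may be entered from anywhere.

The toy tracker below is a sketch that encodes just those rules. ConfigSketch, ALLOWED_SOURCES, verify_configuration and set_configuration are hypothetical names, not the ConfigurationStateMachineMixin API.

```python
# Allowed source configurations for each target configuration, as stated in
# the method descriptions above; "reset" is reachable from any configuration.
ALLOWED_SOURCES = {
    "input": {"reset", "likelihood", "output"},
    "pass_in": {"reset", "input", "likelihood", "output"},
    "likelihood": {"pass_in"},
    "output": {"likelihood"},
}


class ConfigSketch(object):
    """Toy configuration tracker (hypothetical, not the onyx mixin)."""

    def __init__(self):
        self.configuration = "reset"

    def verify_configuration(self, *configs):
        """Raise ValueError unless the current configuration is in configs."""
        if self.configuration not in configs:
            raise ValueError("expected one of %r, got %r" % (configs, self.configuration))

    def set_configuration(self, target):
        """Switch to target and return the previous configuration."""
        if target != "reset" and self.configuration not in ALLOWED_SOURCES[target]:
            raise ValueError("invalid transition %r -> %r" % (self.configuration, target))
        previous, self.configuration = self.configuration, target
        return previous


machine = ConfigSketch()
for target in ("input", "pass_in", "likelihood", "output", "pass_in", "likelihood"):
    machine.set_configuration(target)
print(machine.configuration)  # likelihood
try:
    machine.set_configuration("input")   # allowed: input may follow likelihood
    machine.set_configuration("output")  # rejected: output only follows likelihood
except ValueError as err:
    print(err)  # invalid transition 'input' -> 'output'
```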

Client-provided information about the instance.

This attribute is available from any configuration.

Bases: object

Four factory functions support Hmm building: build_forward_hmm_compact() and build_forward_hmm_exact() construct forward models with arbitrary skipping; build_hmm() supports arbitrary topologies; and build_epsilon_hmm() supports building Hmms with only virtual inputs and outputs.

>>> num_states = 3
>>> hmm0_log = Hmm(num_states, log_domain=True)
>>> dummies = ( DummyModel(2, 0.1), DummyModel(2, 0.2), DummyModel(2, 0.4), DummyModel(2, 0.4) )
>>> mm = GmmMgr(dummies)
>>> models = range(num_states)
>>> hmm1 = build_forward_hmm_compact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)))
>>> hmm2 = build_forward_hmm_compact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)))
>>> hmm1 == hmm2
True

An Hmm is skippable if you can get from a virtual input to a virtual output without going through any real nodes.

>>> hmm1.is_skippable
False
>>> with DebugPrint("hmm_bfm") if False else DebugPrint():
...     np.set_printoptions(linewidth=200)
...     hmm1state = build_forward_hmm_exact(1, mm, range(1), 5, ((0.5,), (0.125,), (0.125,), (0.125,), (0.125,)))
...     hmm2state = build_forward_hmm_exact(2, mm, range(2), 5, ((0.5, 0.5), (0.125, 0.125), (0.125, 0.125), (0.125, 0.125), (0.125, 0.125)))
...     hmm3state = build_forward_hmm_exact(3, mm, range(3), 5, ((0.5, 0.5, 0.5), (0.125, 0.125, 0.125), (0.125, 0.125, 0.125), (0.125, 0.125, 0.125), (0.125, 0.125, 0.125)))
...     hmm4state = build_forward_hmm_exact(4, mm, range(4), 5, ((0.5, 0.5, 0.5, 0.5), (0.125, 0.125, 0.125, 0.125), (0.125, 0.125, 0.125, 0.125), (0.125, 0.125, 0.125, 0.125), (0.125, 0.125, 0.125, 0.125)))
>>> # hmm1state.dot_display()
>>> # hmm2state.dot_display()
>>> # hmm3state.dot_display()
>>> # hmm4state.dot_display()
>>> hmm1state.is_skippable == hmm2state.is_skippable == hmm3state.is_skippable == True
True
>>> hmm4state.is_skippable
False
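Skippability can be checked directly on the transition matrix. This sketch makes two assumptions:

- Nodes are ordered [inputs | real states | outputs], as in the matrices printed in this section.
- The constraints stated for building an Hmm hold: nothing transitions into a virtual input, and nothing transitions out of a virtual output.

Under those constraints, the only paths that avoid every real node are single input-to-output transitions, so only that block of the matrix needs inspecting. is_skippable_sketch is a hypothetical name.

```python
def is_skippable_sketch(trans, num_inputs, num_states):
    """Return True if some virtual input transitions directly to a virtual output.

    Assumes the [inputs | real states | outputs] layout and that no transition
    enters an input or leaves an output, so no longer all-virtual path exists.
    """
    first_output = num_inputs + num_states
    return any(
        trans[i][o] > 0
        for i in range(num_inputs)
        for o in range(first_output, len(trans))
    )


# One real state, one input and one output: every path visits S0.
chain = [
    [0.0, 1.0, 0.0],
    [0.0, 0.5, 0.5],
    [0.0, 0.0, 0.0],
]
# One real state with two inputs and two outputs: I1 feeds O0 directly.
skipping = [
    [0.0, 0.0, 1.0, 0.0, 0.0],    # I0 -> S0
    [0.0, 0.0, 0.0, 1.0, 0.0],    # I1 -> O0, bypassing S0
    [0.0, 0.0, 0.25, 0.5, 0.25],  # S0 -> S0, O0, O1
    [0.0] * 5,
    [0.0] * 5,
]
print(is_skippable_sketch(chain, 1, 1), is_skippable_sketch(skipping, 2, 1))  # False True
```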

>>> hmm1_log = build_forward_hmm_compact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)), log_domain=True)
>>> mm.set_adaptation_state("INITIALIZING")
>>> mm.clear_all_accumulators()
>>> hmm1.begin_adapt("STANDALONE")
>>> hmm1_log.begin_adapt("STANDALONE")
>>> mm.set_adaptation_state("ACCUMULATING")
>>> obs = tuple(repeat(np.array((0,0)), 12))
>>> hmm1.adapt_one_sequence(obs)
>>> hmm1_log.adapt_one_sequence(obs)
>>> mm.set_adaptation_state("APPLYING")
>>> hmm1.end_adapt()
>>> hmm1_log.end_adapt()
>>> mm.set_adaptation_state("NOT_ADAPTING")
>>> print hmm1.to_string(full=True)
Hmm: num_states = 3, log_domain = False
Models (dim = 2):
GmmMgr index list: [0, 1, 2]
DummyModel (Dim = 2) always returning a score of 0.1
DummyModel (Dim = 2) always returning a score of 0.2
DummyModel (Dim = 2) always returning a score of 0.4
Transition probabilities:
[[ 0. 1. 0. 0. 0. ]
 [ 0. 0.2494527 0.7505473 0. 0. ]
 [ 0. 0. 0.49667697 0.50332303 0. ]
 [ 0. 0. 0. 0.88480382 0.11519618]
 [ 0. 0. 0. 0. 0. ]]
>>> print hmm1_log.to_string(full=True)
Hmm: num_states = 3, log_domain = True
Models (dim = 2):
GmmMgr index list: [0, 1, 2]
DummyModel (Dim = 2) always returning a score of 0.1
DummyModel (Dim = 2) always returning a score of 0.2
DummyModel (Dim = 2) always returning a score of 0.4
Transition probabilities:
[[ 0. 1. 0. 0. 0. ]
 [ 0. 0.2494527 0.7505473 0. 0. ]
 [ 0. 0. 0.49667697 0.50332303 0. ]
 [ 0. 0. 0. 0.88480382 0.11519618]
 [ 0. 0. 0. 0. 0. ]]

As might be expected, log-domain and probability-domain processing don’t give exactly the same results, but they’re very close in this simple example:

>>> hmm1 == hmm1_log
False
>>> np.allclose(hmm1.transition_matrix, hmm1_log.transition_matrix)
True
>>> durs = hmm1.find_expected_durations(12)
>>> print "Expected durations = %s" % (durs,)
Expected durations = [ 1.33236099 1.98543653 5.62090219 3.06130028]
>>> hmm1 == hmm2
False

Forward models must have a transition order of at least 2:

>>> models = range(4)
>>> fail = build_forward_hmm_compact(4, mm, models, 1, (tuple(xrange(10)), ))
Traceback (most recent call last):
...
ValueError: expected transition order of at least 2, but got 1

The number of models must also match the number of states:

>>> fail = build_forward_hmm_compact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)), log_domain=True)
Traceback (most recent call last):
...
ValueError: expected 3 models, but got 4
>>> models = range(3)

=================== NETWORK ADAPTATION INTERFACE =========================

Here’s a very simple example of using the network adaptation interface. In this case, the network consists of only one Hmm. Begin by getting the GmmMgr into the right state:

>>> mm.set_adaptation_state("INITIALIZING")
>>> mm.clear_all_accumulators()

Now get the Hmm into network adaptation mode:

>>> hmm2.begin_adapt("NETWORK")

Get the GmmMgr into the accumulation state:

>>> mm.set_adaptation_state("ACCUMULATING")

Some trivial observations, since we’re using dummy models:

>>> num_obs = 12
>>> obs = tuple(repeat(np.array((0,0)), num_obs))

Set up for a forward pass. The terminal argument says whether this Hmm is at the end of the network. In this case we have only one Hmm, so it is terminal:

>>> context = hmm2.init_for_forward_pass(obs, terminal=True)

Add some mass into the system for the forward pass. To match the behavior of standalone adaptation, we divide an initial mass of 1 evenly across the inputs:

>>> hmm2.accum_input_alphas(context, np.array(tuple(repeat(1.0/hmm2.num_inputs,
...                                                         hmm2.num_inputs))))

Actually do the forward pass. Note that we must process one more frame than the number of observations, because an extra frame is automatically added which scores 1 on the exit states of the Hmm (and 0 on all real states). XXX we might want clients to do this for themselves at some point rather than this automatic behavior:

>>> for frame in xrange(num_obs + 1):
...     output_alphas = hmm2.process_one_frame_forward(context)

In this case, the output alphas are None:

>>> output_alphas is None
True

Likewise, we initialize and then make the backward pass:

>>> hmm2.init_for_backward_pass(context)
>>> hmm2.accum_input_betas(context, np.array(tuple(repeat(1.0, hmm2.num_outputs))))
>>> for frame in xrange(num_obs + 1):
...     output_betas = hmm2.process_one_frame_backward(context)
>>> output_betas
array([ 6.80667969e-09])

Now collect all the gamma sums; here there’s only one:

>>> gamma_sum = hmm2.get_initial_gamma_sum()
>>> hmm2.add_to_gamma_sum(gamma_sum, context)
>>> np.set_printoptions(linewidth=75)
>>> gamma_sum.value
array([ 1.36333398e-09, 1.36333398e-09, 1.36333398e-09,
        1.36333398e-09, 1.36333398e-09, 1.36333398e-09,
        1.36333398e-09, 1.36333398e-09, 1.36333398e-09,
        1.36333398e-09, 1.36333398e-09, 1.36333398e-09,
        1.36333398e-09])
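Every entry of gamma_sum.value above is the same because, for each frame t, summing alpha_t(i) * beta_t(i) over the states gives the total probability of the observation sequence. The pure-Python forward-backward sketch below shows this. It assumes:

- a single entry into the first state and a single virtual exit;
- probability-domain arithmetic;
- no extra exit frame, unlike onyx, so it yields 12 sums rather than 13.

forward_backward_sketch is a hypothetical name. The test case uses hmm2's initial parameters: self-loop and advance probabilities of 0.5, DummyModel scores of 0.1, 0.2 and 0.4, and twelve frames. With those inputs, the total it computes is 1.36333398e-09, the same value printed above.

```python
def forward_backward_sketch(pi, trans, exit_probs, likelihoods):
    """Probability-domain forward-backward for a chain with one virtual exit.

    pi[j]             -- entry probability into real state j
    trans[i][j]       -- transition probability between real states
    exit_probs[i]     -- probability of leaving state i for the virtual output
    likelihoods[t][j] -- emission likelihood of frame t in state j
    Returns (alphas, betas, total), where total is P(observations).
    """
    n, num_frames = len(pi), len(likelihoods)
    alphas = [[pi[j] * likelihoods[0][j] for j in range(n)]]
    for t in range(1, num_frames):
        prev = alphas[-1]
        alphas.append([
            sum(prev[i] * trans[i][j] for i in range(n)) * likelihoods[t][j]
            for j in range(n)
        ])
    betas = [list(exit_probs)]  # beta for the last frame is the exit probability
    for t in range(num_frames - 2, -1, -1):
        nxt = betas[0]
        betas.insert(0, [
            sum(trans[i][j] * likelihoods[t + 1][j] * nxt[j] for j in range(n))
            for i in range(n)
        ])
    total = sum(a * e for a, e in zip(alphas[-1], exit_probs))
    return alphas, betas, total


pi = [1.0, 0.0, 0.0]
A = [[0.5, 0.5, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 0.5]]
exit_probs = [0.0, 0.0, 0.5]
frames = [[0.1, 0.2, 0.4]] * 12
alphas, betas, total = forward_backward_sketch(pi, A, exit_probs, frames)
gamma_sums = [sum(a * b for a, b in zip(al, be)) for al, be in zip(alphas, betas)]
print("total = %.8e" % total)  # total = 1.36333398e-09
print(len(gamma_sums), max(abs(g / total - 1.0) for g in gamma_sums) < 1e-9)  # 12 True
```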

Here’s where the actual accumulation happens:

>>> hmm2.do_accumulation(context, gamma_sum)

Finally, get the GmmMgr into the correct state and apply the accumulators to actually adapt the model. Because we’re using dummy models, only the transition probabilities change in this example:

>>> mm.set_adaptation_state("APPLYING")
>>> hmm2.end_adapt()
>>> mm.apply_all_accumulators()
>>> print hmm2.to_string(full=True)
Hmm: num_states = 3, log_domain = False
Models (dim = 2):
GmmMgr index list: [0, 1, 2]
DummyModel (Dim = 2) always returning a score of 0.1
DummyModel (Dim = 2) always returning a score of 0.2
DummyModel (Dim = 2) always returning a score of 0.4
Transition probabilities:
[[ 0. 1. 0. 0. 0. ]
 [ 0. 0.2494527 0.7505473 0. 0. ]
 [ 0. 0. 0.49667697 0.50332303 0. ]
 [ 0. 0. 0. 0.88480382 0.11519618]
 [ 0. 0. 0. 0. 0. ]]
>>> durs = hmm1.find_expected_durations(12, verbose=True)
Occupancy probs after step 0: [ 1. 0. 0. 0.] (sum = 1.0)
Occupancy probs after step 1: [ 0.2494527 0.7505473 0. 0. ] (sum = 1.0)
Occupancy probs after step 2: [ 0.06222665 0.56000561 0.37776774 0. ] (sum = 1.0)
Occupancy probs after step 3: [ 0.01552261 0.32484593 0.61611406 0.0435174 ] (sum = 1.0)
Occupancy probs after step 4: [ 0.00387216 0.17299394 0.70864251 0.11449139] (sum = 1.0)
Occupancy probs after step 5: [ 0.00096592 0.08882834 0.71408144 0.1961243 ] (sum = 1.0)
Occupancy probs after step 6: [ 2.40951302e-04 4.48439616e-02 6.76531333e-01 2.78383754e-01] (sum = 1.0)
Occupancy probs after step 7: [ 6.01059535e-05 2.24538084e-02 6.21168505e-01 3.56317580e-01] (sum = 1.0)
Occupancy probs after step 8: [ 1.49935925e-05 1.11974019e-02 5.60913784e-01 4.27873820e-01] (sum = 1.0)
Occupancy probs after step 9: [ 3.74019217e-06 5.57274505e-03 5.01934568e-01 4.92488947e-01] (sum = 1.0)
Occupancy probs after step 10: [ 9.33001044e-07 2.77066132e-03 4.46918514e-01 5.50309892e-01] (sum = 1.0)
Occupancy probs after step 11: [ 2.32739632e-07 1.37682393e-03 3.96829745e-01 6.01793198e-01] (sum = 1.0)
>>> print "Expected durations = %s" % (durs,)
Expected durations = [ 1.33236099 1.98543653 5.62090219 3.06130028]
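The verbose trace above is consistent with a simple occupancy recursion:

1. Multiply the occupancy vector by the trained transition matrix, restricted to the real states plus the exit. The exit is treated as absorbing, which is why the printed sums stay at 1.0.
2. Take the expected duration of each node as the sum of its occupancy over the 12 steps.

The sketch below uses the hypothetical name expected_durations_sketch and matrix entries copied from the printout. It reproduces the printed durations to about six decimal places, but it is a reconstruction, not the onyx code.

```python
def expected_durations_sketch(trans, num_steps):
    """Sum per-node occupancy probabilities over num_steps steps.

    trans is a square matrix over [real states..., exit]; the exit row is
    assumed absorbing (probability 1 of staying put).  Occupancy starts in the
    first real state, matching "after step 0: [ 1. 0. 0. 0.]" above.
    """
    n = len(trans)
    occupancy = [1.0] + [0.0] * (n - 1)
    totals = [0.0] * n
    for _ in range(num_steps):
        totals = [tot + occ for tot, occ in zip(totals, occupancy)]
        occupancy = [
            sum(occupancy[i] * trans[i][j] for i in range(n)) for j in range(n)
        ]
    return totals


A = [
    [0.2494527, 0.7505473, 0.0, 0.0],
    [0.0, 0.49667697, 0.50332303, 0.0],
    [0.0, 0.0, 0.88480382, 0.11519618],
    [0.0, 0.0, 0.0, 1.0],  # absorbing exit: an assumption of this sketch
]
durations = expected_durations_sketch(A, 12)
print(durations)
```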

>>> with DebugPrint('hmm_fva'):
...     seg, scores, trans = hmm1.find_viterbi_alignment(obs, start_state=0)
hmm_fva: Occupancy likelihoods after step 0: [ 0.1 0. 0. ]
hmm_fva: Traceback: [-1 0 -1 -1 -1]
hmm_fva: Occupancy likelihoods after step 1: [ 0.00249453 0.01501095 0. ]
hmm_fva: Traceback: [-1 1 1 -1 -1]
hmm_fva: Occupancy likelihoods after step 2: [ 6.22266467e-05 1.49111818e-03 3.02214175e-03]
hmm_fva: Traceback: [-1 1 2 2 -1]
hmm_fva: Occupancy likelihoods after step 3: [ 1.55226044e-06 1.48120808e-04 1.06960102e-03]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 4: [ 3.87215550e-08 1.47136387e-05 3.78554827e-04]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 5: [ 9.65919611e-10 1.46158509e-06 1.33978698e-04]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 6: [ 2.40951245e-11 1.45187130e-07 4.74179440e-05]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 7: [ 6.01059372e-13 1.44222206e-08 1.67822305e-05]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 8: [ 1.49935884e-14 1.43263694e-09 5.93959248e-06]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 9: [ 3.74019108e-16 1.42311551e-10 2.10214957e-06]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 10: [ 9.33000770e-18 1.41365739e-11 7.43995975e-07]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 11: [ 2.32739559e-19 1.40426212e-12 2.63316190e-07]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 12: [ 0.00000000e+00 5.80575098e-20 6.97464655e-13 2.32983169e-07
   3.03330179e-08]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Most likely output state: 4
>>> seg
array([0, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3])
>>> scores
array([ 0.1, 0.2, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4,
        0.4, 0. ])
>>> trans
array([ 1. , 0.7505473 , 0.50332303, 0.88480382, 0.88480382,
        0.88480382, 0.88480382, 0.88480382, 0.88480382, 0.88480382,
        0.88480382, 0.88480382, 0.11519618])

Using normalize=True keeps likelihoods closer to 1, helping prevent underflow.

>>> with DebugPrint('hmm_fva'):
...     seg, scores, trans1 = hmm1.find_viterbi_alignment(obs, normalize=True)
hmm_fva: Applying normalization 1.0 (log2_norm = 0)
hmm_fva: Occupancy likelihoods after step 0: [ 0.1 0. 0. ]
hmm_fva: Traceback: [-1 0 -1 -1 -1]
hmm_fva: Applying normalization 16.0 (log2_norm = 4)
hmm_fva: Occupancy likelihoods after step 1: [ 0.03991243 0.24017513 0. ]
hmm_fva: Traceback: [-1 1 1 -1 -1]
hmm_fva: Applying normalization 16.0 (log2_norm = 4)
hmm_fva: Occupancy likelihoods after step 2: [ 0.01593002 0.38172625 0.77366829]
hmm_fva: Traceback: [-1 1 2 2 -1]
hmm_fva: Applying normalization 2.0 (log2_norm = 1)
hmm_fva: Occupancy likelihoods after step 3: [ 0.00079476 0.07583785 0.54763572]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Applying normalization 4.0 (log2_norm = 2)
hmm_fva: Occupancy likelihoods after step 4: [ 7.93017447e-05 3.01335320e-02 7.75280285e-01]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Applying normalization 2.0 (log2_norm = 1)
hmm_fva: Occupancy likelihoods after step 5: [ 3.95640673e-06 5.98665252e-03 5.48776746e-01]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Applying normalization 4.0 (log2_norm = 2)
hmm_fva: Occupancy likelihoods after step 6: [ 3.94774520e-07 2.37874594e-03 7.76895595e-01]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Applying normalization 2.0 (log2_norm = 1)
hmm_fva: Occupancy likelihoods after step 7: [ 1.96955135e-08 4.72587324e-04 5.49920130e-01]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Applying normalization 4.0 (log2_norm = 2)
hmm_fva: Occupancy likelihoods after step 8: [ 1.96523962e-09 1.87778589e-04 7.78514266e-01]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Applying normalization 2.0 (log2_norm = 1)
hmm_fva: Occupancy likelihoods after step 9: [ 9.80468651e-11 3.73061193e-05 5.51065898e-01]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Applying normalization 4.0 (log2_norm = 2)
hmm_fva: Occupancy likelihoods after step 10: [ 9.78322215e-12 1.48232721e-05 7.80136323e-01]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Applying normalization 2.0 (log2_norm = 1)
hmm_fva: Occupancy likelihoods after step 11: [ 4.88090232e-13 2.94495112e-06 5.52214074e-01]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 12: [ 0.00000000e+00 1.21755423e-13 1.46268940e-06 4.88601118e-01
   6.36129491e-02]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Most likely output state: 4
>>> seg
array([0, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3])
>>> scores
array([ 0.1, 0.2, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4,
        0.4, 0. ])
>>> trans1[0] = 0
>>> (trans1[1:] == trans[1:]).all()
True
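Scaling by a power of two, as the log2_norm values in the trace suggest, is exact in binary floating point as long as no overflow or subnormal results occur. Only the exponent changes, so underflow is postponed without adding rounding error.

The sketch below uses a hypothetical rule: scale so that the largest likelihood lands in [0.5, 1). This rule does not reproduce the factors chosen in the trace above; this page does not describe how onyx selects them. normalize_pow2 is a hypothetical name.

```python
import math


def normalize_pow2(likelihoods):
    """Scale a likelihood vector by 2**k so its peak lies in [0.5, 1).

    Hypothetical rule for illustration.  Returns (scaled_values, k); dividing
    by 2**k (math.ldexp(x, -k)) recovers the original values exactly.
    """
    peak = max(likelihoods)
    if peak <= 0.0:
        return list(likelihoods), 0
    _, exponent = math.frexp(peak)  # peak == mantissa * 2**exponent, 0.5 <= mantissa < 1
    k = -exponent
    return [math.ldexp(x, k) for x in likelihoods], k


values, k = normalize_pow2([0.00249453, 0.01501095, 0.0])
print(k)  # 6
print(math.ldexp(values[1], -k) == 0.01501095)  # True: the scaling is lossless
```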

Log-domain behavior is the same as probability-domain behavior with respect to the best state and score for each frame, but the transition probabilities may vary slightly.

>>> with DebugPrint('hmm_fva'):
...     seg, scores, trans2 = hmm1_log.find_viterbi_alignment(obs, start_state=0)
hmm_fva: Occupancy likelihoods after step 0: [ 0.1 0. 0. ]
hmm_fva: Traceback: [-1 0 -1 -1 -1]
hmm_fva: Occupancy likelihoods after step 1: [ 0.00249453 0.01501095 0. ]
hmm_fva: Traceback: [-1 1 1 -1 -1]
hmm_fva: Occupancy likelihoods after step 2: [ 6.22266467e-05 1.49111818e-03 3.02214175e-03]
hmm_fva: Traceback: [-1 1 2 2 -1]
hmm_fva: Occupancy likelihoods after step 3: [ 1.55226044e-06 1.48120808e-04 1.06960102e-03]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 4: [ 3.87215550e-08 1.47136387e-05 3.78554827e-04]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 5: [ 9.65919611e-10 1.46158509e-06 1.33978698e-04]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 6: [ 2.40951245e-11 1.45187130e-07 4.74179440e-05]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 7: [ 6.01059372e-13 1.44222206e-08 1.67822305e-05]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 8: [ 1.49935884e-14 1.43263694e-09 5.93959248e-06]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 9: [ 3.74019108e-16 1.42311551e-10 2.10214957e-06]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 10: [ 9.33000770e-18 1.41365739e-11 7.43995975e-07]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 11: [ 2.32739559e-19 1.40426212e-12 2.63316190e-07]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Occupancy likelihoods after step 12: [ 0.00000000e+00 5.80575098e-20 6.97464655e-13 2.32983169e-07
   3.03330179e-08]
hmm_fva: Traceback: [-1 1 2 3 3]
hmm_fva: Most likely output state: 4
>>> seg
array([0, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3])
>>> scores
array([ 0.1, 0.2, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4,
        0.4, 0. ])
>>> np.allclose(trans2, trans)
True
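Because the logarithm is monotonic, comparing log scores picks the same best predecessors as comparing probabilities, apart from rounding in near-ties; only the reported values differ slightly. The sketch below runs one Viterbi recursion in either domain on the transition values printed for hmm1 and recovers the segmentation shown above. It assumes:

- a single virtual exit;
- a start in state 0;
- a final step that applies only the exit transition, as the step-12 trace suggests.

viterbi_sketch is a hypothetical name.

```python
import math


def viterbi_sketch(trans, exit_probs, likelihoods, start_state, use_log):
    """Best state sequence for a chain entered in start_state (hypothetical sketch).

    trans[i][j] are transitions between real states, exit_probs[i] the
    transition from state i to the single virtual output, likelihoods[t][j]
    the emission score of frame t in state j.  The result has one entry per
    frame plus a final entry len(trans) standing for the output.  Ties go to
    the lower-numbered predecessor.
    """
    n = len(trans)
    if use_log:
        def w(p):
            return math.log(p) if p > 0 else float("-inf")

        def combine(a, b):
            return a + b
    else:
        def w(p):
            return p

        def combine(a, b):
            return a * b
    worst = w(0.0)
    delta = [w(likelihoods[0][j]) if j == start_state else worst for j in range(n)]
    back = []
    for frame in likelihoods[1:]:
        prev, delta, pointers = delta, [], []
        for j in range(n):
            best_i, best = 0, worst
            for i in range(n):
                cand = combine(prev[i], w(trans[i][j]))
                if cand > best:
                    best_i, best = i, cand
            delta.append(combine(best, w(frame[j])))
            pointers.append(best_i)
        back.append(pointers)
    final = [combine(delta[i], w(exit_probs[i])) for i in range(n)]
    state = max(range(n), key=lambda i: final[i])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path + [n]


A = [[0.2494527, 0.7505473, 0.0], [0.0, 0.49667697, 0.50332303], [0.0, 0.0, 0.88480382]]
exit_probs = [0.0, 0.0, 0.11519618]
frames = [[0.1, 0.2, 0.4]] * 12
prob_path = viterbi_sketch(A, exit_probs, frames, 0, use_log=False)
log_path = viterbi_sketch(A, exit_probs, frames, 0, use_log=True)
print(prob_path)  # [0, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3]
print(prob_path == log_path)  # True
```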

============================= Decode Instance Interface ======================

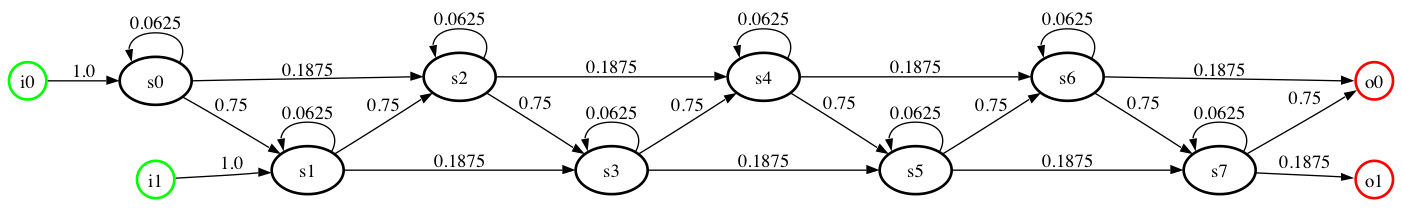

Create an Hmm with single-state skipping and observation likelihoods of one, so that no probability leaks out:

>>> dummies = tuple(repeat(DummyModel(2, 1.0), 8))
>>> mm = GmmMgr(dummies)
>>> models = range(8)
>>> fs = build_forward_hmm_compact(8, mm, models, 3, ((0.0625,) * 8, (0.75,) * 8, (0.1875,) * 8))
>>> fs.num_states
8

Here’s what this HMM looks like (see also dot_display()):

>>> print fs.to_string(full=True)
Hmm: num_states = 8, log_domain = False
Models (dim = 2):
GmmMgr index list: [0, 1, 2, 3, 4, 5, 6, 7]
DummyModel (Dim = 2) always returning a score of 1.0
DummyModel (Dim = 2) always returning a score of 1.0
DummyModel (Dim = 2) always returning a score of 1.0
DummyModel (Dim = 2) always returning a score of 1.0
DummyModel (Dim = 2) always returning a score of 1.0
DummyModel (Dim = 2) always returning a score of 1.0
DummyModel (Dim = 2) always returning a score of 1.0
DummyModel (Dim = 2) always returning a score of 1.0
Transition probabilities:
[[ 0. 0. 1. 0. 0. 0. 0. 0. 0. 0.
   0. 0. ]
 [ 0. 0. 0. 1. 0. 0. 0. 0. 0. 0.
   0. 0. ]
 [ 0. 0. 0.0625 0.75 0.1875 0. 0. 0. 0. 0.
   0. 0. ]
 [ 0. 0. 0. 0.0625 0.75 0.1875 0. 0. 0. 0.
   0. 0. ]
 [ 0. 0. 0. 0. 0.0625 0.75 0.1875 0. 0. 0.
   0. 0. ]
 [ 0. 0. 0. 0. 0. 0.0625 0.75 0.1875 0. 0.
   0. 0. ]
 [ 0. 0. 0. 0. 0. 0. 0.0625 0.75 0.1875
   0. 0. 0. ]
 [ 0. 0. 0. 0. 0. 0. 0. 0.0625 0.75
   0.1875 0. 0. ]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0.0625
   0.75 0.1875 0. ]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0.
   0.0625 0.75 0.1875]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.
   0. 0. ]
 [ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0.
   0. 0. ]]
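The printed matrix follows a regular pattern, which the sketch below rebuilds. It is a reconstruction inferred from the printouts in this section, not the code of build_forward_hmm_compact(). It assumes:

- there are order - 1 virtual inputs and order - 1 virtual outputs;
- virtual input k feeds node num_inputs + k with probability 1;
- the k-th tuple of probabilities gives each real state's probability of advancing k positions, with k = 0 being the self-loop.

compact_forward_matrix is a hypothetical name.

```python
def compact_forward_matrix(num_states, order, probs):
    """Rebuild a forward transition matrix in [inputs | states | outputs] order.

    Hypothetical reconstruction: probs[k][s] is the probability of advancing
    k nodes from real state s, and input k feeds node num_inputs + k.
    """
    num_inputs = num_outputs = order - 1
    n = num_inputs + num_states + num_outputs
    trans = [[0.0] * n for _ in range(n)]
    for k in range(num_inputs):
        trans[k][num_inputs + k] = 1.0
    for s in range(num_states):
        for k in range(order):
            trans[num_inputs + s][num_inputs + s + k] = probs[k][s]
    return trans


m = compact_forward_matrix(8, 3, ((0.0625,) * 8, (0.75,) * 8, (0.1875,) * 8))
print(m[2])  # row for S0: self-loop 0.0625, advance 0.75, skip 0.1875
```

Under this assumed layout, a model with fewer real states than order - 1 wires some inputs straight to outputs. That agrees with the is_skippable results for the order-5 models earlier. With zero states, every input feeds the matching output, consistent with the traceback array([-1, -1, -1, 0, 1, 2]) in the zero-state example below.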

Get a decoding instance:

>>> fsc = fs.get_decode_instance('A')
>>> fsc.hmm is fs
True
>>> fsc.user_data == 'A'
True

Inject some mass. Note that the total injected mass sums to one:

>>> scores = np.array((0.25, 0.75))
>>> ids = np.array((25, 75))
>>> fsc.activate(scores, ids)
>>> print fsc.token_string
I0 I1 | S0 S1 S2 S3 S4 S5 S6 S7 | O0 O1
0.250000 0.750000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000
25 75 | -1 -1 -1 -1 -1 -1 -1 -1 | -1 -1
input_activation_ids_seen: set([25, 75])
output_activation_ids_seen: set([])
current_activation_ids: set([25, 75])
lost_activation_ids: set([])

We can pass tokens and score five times total without any mass leaking out of the right-hand side because that’s how long it takes for the mass to get to the (virtual) output states.

The following illustrates the frame-level alignment diagnostic. Turn on hmm.HmmDecodeInstance.per_frame_alignment, giving it control data in the form of a pair (frame_set, callback). When pass_tokens() is called on an instance, the callback is called with that instance as its argument. If the callback returns any non-None value, the alignment details for that instance on that frame are printed, along with the callback’s return value.

>>> def pfa_callback(instance): return 'doctest per_frame_alignment return value'
>>> control = (set(xrange(20)), pfa_callback)
>>> with DebugPrint(('hmm.HmmDecodeInstance.per_frame_alignment', control)):
...     fsc.pass_tokens(frame_index=0)
hmm.HmmDecodeInstance.per_frame_alignment: frame, return = (0, doctest per_frame_alignment return value)
hmm.HmmDecodeInstance.per_frame_alignment: traceback = array([-1, -1, 0, 1, -1, -1, -1, -1, -1, -1, -1, -1])
>>> print fsc.token_string
I0 I1 | S0 S1 S2 S3 S4 S5 S6 S7 | O0 O1
0.000000 0.000000 | 0.250000 0.750000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000
-1 -1 | 25 75 -1 -1 -1 -1 -1 -1 | -1 -1
input_activation_ids_seen: set([25, 75])
output_activation_ids_seen: set([])
current_activation_ids: set([25, 75])
lost_activation_ids: set([])
>>> fsc.has_output and fsc.output_tokens
False
>>> dummy_obs = (0,0)
>>> fsc.apply_likelihoods(dummy_obs)
>>> fsc.internal_scores.sum()
1.0
>>> print fsc.token_string
I0 I1 | S0 S1 S2 S3 S4 S5 S6 S7 | O0 O1
0.000000 0.000000 | 0.250000 0.750000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000
-1 -1 | 25 75 -1 -1 -1 -1 -1 -1 | -1 -1
input_activation_ids_seen: set([25, 75])
output_activation_ids_seen: set([])
current_activation_ids: set([25, 75])
lost_activation_ids: set([])
>>> with DebugPrint(('hmm.HmmDecodeInstance.per_frame_alignment', control)):
...     with SUMMED_COMBINATION():
...         for i in xrange(1,4): fsc.pass_tokens(frame_index=i); fsc.apply_likelihoods(dummy_obs)
hmm.HmmDecodeInstance.per_frame_alignment: frame, return = (1, doctest per_frame_alignment return value)
hmm.HmmDecodeInstance.per_frame_alignment: traceback = array([-1, -1, 2, 2, 3, 3, -1, -1, -1, -1, -1, -1])
hmm.HmmDecodeInstance.per_frame_alignment: frame, return = (2, doctest per_frame_alignment return value)
hmm.HmmDecodeInstance.per_frame_alignment: traceback = array([-1, -1, 2, 3, 3, 4, 4, 5, -1, -1, -1, -1])
hmm.HmmDecodeInstance.per_frame_alignment: frame, return = (3, doctest per_frame_alignment return value)
hmm.HmmDecodeInstance.per_frame_alignment: traceback = array([-1, -1, 2, 3, 3, 4, 5, 6, 6, 7, -1, -1])
>>> fsc.internal_scores.sum()
1.0
>>> print fsc.token_string
I0 I1 | S0 S1 S2 S3 S4 S5 S6 S7 | O0 O1
0.000000 0.000000 | 0.000061 0.002380 0.033508 0.199402 0.436707 0.262024 0.060974 0.004944 | 0.000000 0.000000
-1 -1 | 25 25 25 25 75 75 75 75 | -1 -1
input_activation_ids_seen: set([25, 75])
output_activation_ids_seen: set([])
current_activation_ids: set([25, 75])
lost_activation_ids: set([])

And here’s the non-zero output:

>>> with SUMMED_COMBINATION():
...     fsc.pass_tokens()
>>> fsc.has_output and fsc.output_tokens
(array([ 0.01514053, 0.00092697]), array([75, 75]))
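The summed-combination numbers above can be reproduced by hand. This minimal sketch assumes:

- the 12-node layout printed for fs (I0, I1, S0 to S7, O0, O1);
- SUMMED combination, where incoming scores are added rather than maximized;
- DummyModel likelihoods of 1.0, so scoring leaves the state scores unchanged and can be skipped.

build_fs_matrix and summed_pass are hypothetical names. Four passes give the state scores in the last token_string. A fifth pass gives the output scores 0.01514053 and 0.00092697. Because every probability here is a short binary fraction, the arithmetic is exact.

```python
def build_fs_matrix():
    """12 x 12 matrix for fs: I0, I1, S0..S7, O0, O1 (values from the printout)."""
    n = 12
    trans = [[0.0] * n for _ in range(n)]
    trans[0][2] = 1.0  # I0 -> S0
    trans[1][3] = 1.0  # I1 -> S1
    for node in range(2, 10):  # S0..S7: stay, advance one, advance two
        for step, p in enumerate((0.0625, 0.75, 0.1875)):
            trans[node][node + step] = p
    return trans


def summed_pass(trans, scores):
    """new[j] = sum_i scores[i] * trans[i][j]; inputs end up empty since nothing enters them."""
    n = len(trans)
    return [sum(scores[i] * trans[i][j] for i in range(n)) for j in range(n)]


fs_trans = build_fs_matrix()
scores = [0.25, 0.75] + [0.0] * 10  # mass injected into I0 and I1
for _ in range(4):  # the frame-0 pass plus the passes for frames 1-3
    scores = summed_pass(fs_trans, scores)
after_four = scores
after_five = summed_pass(fs_trans, after_four)
print(["%.6f" % s for s in after_four[2:10]])
# ['0.000061', '0.002380', '0.033508', '0.199402', '0.436707', '0.262024', '0.060974', '0.004944']
print(["%.8f" % s for s in after_five[10:]])  # ['0.01514053', '0.00092697']
```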

Applying one more set of likelihoods drops the output mass on the floor:

>>> fsc.apply_likelihoods(dummy_obs)
>>> fsc.internal_scores.sum() < 1
True
>>> print fsc.token_string
I0 I1 | S0 S1 S2 S3 S4 S5 S6 S7 | O0 O1
0.000000 0.000000 | 0.000004 0.000195 0.003891 0.038040 0.183128 0.381294 0.282211 0.095169 | 0.015141 0.000927
-1 -1 | 25 25 25 25 25 75 75 75 | 75 75
input_activation_ids_seen: set([25, 75])
output_activation_ids_seen: set([75])
current_activation_ids: set([25, 75])
lost_activation_ids: set([])
>>> with DebugPrint(('hmm.HmmDecodeInstance.per_frame_alignment', control)):
...     fsc.pass_tokens(frame_index=5)
hmm.HmmDecodeInstance.per_frame_alignment: frame, return = (5, doctest per_frame_alignment return value)
hmm.HmmDecodeInstance.per_frame_alignment: traceback = array([-1, -1, 2, 3, 4, 4, 5, 6, 7, 8, 9, 9])

All feasible alignments in this HMM will have the same score since the transition structure is uniform. Our tie-breaking favors staying in the initial state for as long as possible.

>>> seg, scores, trans = fs.find_viterbi_alignment([dummy_obs] * 10)
>>> seg
array([0, 0, 0, 1, 2, 3, 4, 5, 6, 7, 8])
>>> trans
array([ 0. , 0.0625, 0.0625, 0.75 , 0.75 , 0.75 , 0.75 ,
        0.75 , 0.75 , 0.75 , 0.75 ])
>>> num_states = 1
>>> out_order = 2
>>> dummies = [DummyModel(2, 1.0)] * num_states
>>> mm = GmmMgr(dummies)
>>> models = range(num_states)
>>> fs = build_forward_hmm_compact(num_states, mm, models, 2, tuple([p] * num_states for p in toy_probs(out_order)))
>>> fsc = fs.get_decode_instance()
>>> fsc.activate(np.array(toy_probs(out_order - 1)), np.arange(out_order - 1))
>>> print fsc.token_string
I0 | S0 | O0
1.000000 | 0.000000 | 0.000000
0 | -1 | -1
input_activation_ids_seen: set([0])
output_activation_ids_seen: set([])
current_activation_ids: set([0])
lost_activation_ids: set([])
>>> fsc.pass_tokens()
>>> fsc.traceback
array([-1, 0, -1])
>>> fsc.has_output and fsc.output_tokens
False
>>> fsc.apply_likelihoods(dummy_obs)
>>> fsc.internal_scores.sum()
1.0
>>> fsc.pass_tokens()
>>> fsc.has_output and fsc.output_tokens
(array([ 0.75]), array([0]))
>>> fsc.apply_likelihoods(dummy_obs)

Note that the output mass has now been lost:

>>> sum(fsc.internal_scores)
0.25

Note that a zero-state Hmm makes sense, even with the multiple inputs and outputs used in regular Hmms to implement state skipping. A zero-state Hmm is just an epsilon.

>>> num_states = 0
>>> out_order = 4
>>> dummies = [DummyModel(2, 1.0)]
>>> mm = GmmMgr(dummies)
>>> models = range(num_states)
>>> fs = build_forward_hmm_compact(num_states, mm, models, out_order, tuple([p] * num_states for p in toy_probs(out_order)))
>>> fsc = fs.get_decode_instance()
>>> scores = np.array(toy_probs(out_order - 1))
>>> ids = np.arange(len(scores)) + 100
>>> fsc.activate(scores, ids)
>>> print fsc.token_string
I0 I1 I2 | O0 O1 O2
0.250000 0.625000 0.125000 | 0.000000 0.000000 0.000000
100 101 102 | -1 -1 -1
input_activation_ids_seen: set([100, 101, 102])
output_activation_ids_seen: set([])
current_activation_ids: set([100, 101, 102])
lost_activation_ids: set([])
>>> fsc.pass_tokens()
>>> fsc.traceback
array([-1, -1, -1, 0, 1, 2])
>>> fsc.has_output and fsc.output_tokens
(array([ 0.25 , 0.625, 0.125]), array([100, 101, 102]))
>>> fsc.output_tokens[0].sum()
1.0
>>> fsc.apply_likelihoods(dummy_obs)
>>> len(fsc.internal_scores)
0
>>> fsc.internal_scores.sum()
0.0
>>> print fsc.token_string
I0 I1 I2 | O0 O1 O2
0.000000 0.000000 0.000000 | 0.250000 0.625000 0.125000
-1 -1 -1 | 100 101 102
input_activation_ids_seen: set([100, 101, 102])
output_activation_ids_seen: set([100, 101, 102])
current_activation_ids: set([102, 100, 101])
lost_activation_ids: set([])
>>> fs = Hmm(num_states)
>>> dummies = [DummyModel(2, 1.0)] * 1
>>> mm = GmmMgr(dummies)
>>> models = range(num_states)

============================= End Decode Instance Interface ======================

Bases: object

Bases: object

Add to the incoming alpha values for this model. alphas must be a Numpy array of length self.num_inputs and must be in the working domain. Usually it will be the output of some previous call to process_one_frame_forward.

Add to the incoming beta values for this model. betas must be a Numpy array of length self.num_outputs and it must be in the working domain. Usually it will be the output of some previous call to process_one_frame_backward.

Accumulate an Hmm in standalone mode on one sequence. That is, assume this model is all there is; we’re not feeding to or from another model, so we start with hardwired inputs on both forward and backward passes.

Get the sum of gammas over all states in this context for each frame and add it to the gamma_sum provided.

Initialize accumulators for this HMM if they haven’t already been initialized. Existing accumulators are not affected by this call.

Build an Hmm from models and transition probabilities. gmm_mgr is a GmmMgr instance which holds the underlying models for this HMM; models is an iterable of self.num_states indices into gmm_mgr. num_inputs and num_outputs specify the number of ways into and out of the model. transition_probs is a Numpy array with shape (n,n), where n = num_inputs + self.num_states + num_outputs. Transitions into the (virtual) input states must be zero, transitions out of the (virtual) output states must be zero. If dimension and covariance_type are not None, then models must be empty and the model built will be
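The layout constraints above can be checked mechanically. This is a minimal sketch over plain nested lists. check_transition_matrix is a hypothetical helper name. It checks only the shape and the two stated rules (no transitions into virtual inputs, none out of virtual outputs). It does not check that rows are normalized.

```python
def check_transition_matrix(trans, num_inputs, num_states, num_outputs):
    """Raise ValueError if trans violates the stated layout constraints.

    The matrix must be n x n with n = num_inputs + num_states + num_outputs,
    the columns for virtual input states must be zero, and the rows for
    virtual output states must be zero.
    """
    n = num_inputs + num_states + num_outputs
    if len(trans) != n or any(len(row) != n for row in trans):
        raise ValueError("expected a %d x %d transition matrix" % (n, n))
    for row in trans:
        if any(row[j] != 0 for j in range(num_inputs)):
            raise ValueError("transitions into virtual input states must be zero")
    for i in range(n - num_outputs, n):
        if any(p != 0 for p in trans[i]):
            raise ValueError("transitions out of virtual output states must be zero")


good = [[0.0, 1.0, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 0.0]]
check_transition_matrix(good, 1, 1, 1)  # passes silently
bad = [[0.0, 1.0, 0.0], [0.5, 0.0, 0.5], [0.0, 0.0, 0.0]]  # S0 -> I0 is not allowed
try:
    check_transition_matrix(bad, 1, 1, 1)
except ValueError as err:
    print(err)  # transitions into virtual input states must be zero
```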

Clear accumulators for this HMM.

A dimension of None means we’re an epsilon model, i.e., we have no real states, so we don’t care what dimension the observations passed to us have.

Accumulate the results of processing the current sequence.

Display a dot-generated representation of the Hmm. Returns the name of the temporary file that the display tool is working from. The caller is responsible for removing this file.

Optional temp_file_prefix is the prefix used for the filename.

Optional display_tool_format is a formatting string, with %s where the filename goes, used to generate the command that will display the file. By default it assumes you’re on a Mac and have Graphviz.app installed in the /Applications directory.

Remaining keyword arguments are handed to the dot_iter function.

Returns a generator that yields lines of text, including newlines. The text represents the Hmm in the DOT language for which there are many displayers. See also dot_display().

Optional argument hmm_label is a string label for the Hmm. Optional argument graph_type defaults to ‘digraph’; it can also be ‘graph’ or ‘subgraph’.

Optional state_callback is a function that will be called with the index of each state and a string from the set {‘i’, ‘s’, ‘o’} indicating that the state in question is an input state, a real state, or an output state, respectively. The function should return a valid DOT language a_list of attributes for drawing the node. By default, each node will be labelled with the type character followed by the index.

Optional transition_callback is a function that will be called with the index of the source state, the index of the destination state, and the probability of the transition. It should return a valid DOT language a_list of attributes for drawing the transition. By default, each transition will be labelled with its probability.

Optional globals is an iterable that yields strings to put in the globals section of the DOT file.

>>> dummies = ( DummyModel(2, 0.1), DummyModel(2, 0.2), DummyModel(2, 0.4), DummyModel(2, 0.4) )

>>> mm = GmmMgr(dummies)

>>> models = range(4)

>>> hmm = build_forward_hmm_compact(4, mm, models, 3, ((0.25, 0.25, 0.25, 0.4),

... (0.5, 0.5, 0.5, 0.4),

... (0.25, 0.25, 0.25, 0.2)))

>>> hmm2 = build_forward_hmm_exact(4, mm, models, 3, ((0.25, 0.25, 0.25, 0.4),

... (0.5, 0.5, 0.5, 0.4),

... (0.25, 0.25, 0.25, 0.2)))

>>> trans = np.array(((0.0, 1.0, 0.0, 0.0, 0.0, 0.0),

... (0.0, 0.0, 0.5, 0.25, 0.25, 0.0),

... (0.0, 0.0, 0.5, 0.25, 0.0, 0.25),

... (0.0, 0.5, 0.25, 0.25, 0.0, 0.0),

... (0.0, 0.5, 0.25, 0.25, 0.0, 0.0),

... (0.0, 0.0, 0.0, 0.0, 0.0, 0.0)))

>>> hmm3 = build_hmm(4, mm, models, 1, 1, trans)

>>> line_iter, in_names, out_names = hmm.dot_iter()

>>> print in_names

('state0', 'state1')

>>> print out_names

('state6', 'state7')

>>> print ''.join(line_iter)

digraph {

rankdir=LR;

state0 [label="i0", style=bold, shape=circle, fixedsize=true, height=0.4, color=green];

state1 [label="i1", style=bold, shape=circle, fixedsize=true, height=0.4, color=green];

state2 [label="s0", style=bold];

state3 [label="s1", style=bold];

state4 [label="s2", style=bold];

state5 [label="s3", style=bold];

state6 [label="o0", style=bold, shape=circle, fixedsize=true, height=0.4, color=red];

state7 [label="o1", style=bold, shape=circle, fixedsize=true, height=0.4, color=red];

state0 -> state2 [label="1.0"];

state1 -> state3 [label="1.0"];

state2 -> state2 [label="0.25"];

state2 -> state3 [label="0.5"];

state2 -> state4 [label="0.25"];

state3 -> state3 [label="0.25"];

state3 -> state4 [label="0.5"];

state3 -> state5 [label="0.25"];

state4 -> state4 [label="0.25"];

state4 -> state5 [label="0.5"];

state4 -> state6 [label="0.25"];

state5 -> state5 [label="0.4"];

state5 -> state6 [label="0.4"];

state5 -> state7 [label="0.2"];

}

<BLANKLINE>

>>> line_iter, in_names, out_names = hmm.dot_iter(hmm_label='Foo', state_callback=lambda i,gi,t: 'label="%s%d", style=bold' % (t,i), globals=('rankdir=LR;',))

>>> print ''.join(line_iter)

digraph Foo {

rankdir=LR;

rankdir=LR;

state0 [label="i0", style=bold];

state1 [label="i1", style=bold];

state2 [label="s0", style=bold];

state3 [label="s1", style=bold];

state4 [label="s2", style=bold];

state5 [label="s3", style=bold];

state6 [label="o0", style=bold];

state7 [label="o1", style=bold];

state0 -> state2 [label="1.0"];

state1 -> state3 [label="1.0"];

state2 -> state2 [label="0.25"];

state2 -> state3 [label="0.5"];

state2 -> state4 [label="0.25"];

state3 -> state3 [label="0.25"];

state3 -> state4 [label="0.5"];

state3 -> state5 [label="0.25"];

state4 -> state4 [label="0.25"];

state4 -> state5 [label="0.5"];

state4 -> state6 [label="0.25"];

state5 -> state5 [label="0.4"];

state5 -> state6 [label="0.4"];

state5 -> state7 [label="0.2"];

}

<BLANKLINE>

>>> # hmm.dot_display()

>>> # hmm.dot_display(hmm_label='FourStateForward',

... #     globals=('label="Compact Four-state HMM with 1 node skipping"',

... #              'labelloc=top'))

>>> # hmm2.dot_display(hmm_label='FourStateForward',

... #      globals=('label="Exact Four-state HMM with 1 node skipping"',

... #               'labelloc=top'))

>>> # hmm3.dot_display()
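To make the DOT output above easier to relate to the transition matrix, here is a minimal sketch of a DOT emitter in the same style. It is hypothetical (dot_lines is not part of this module), it assumes a nested-list transition matrix in the [inputs, real states, outputs] layout described for build_hmm(), and it omits the extra shape and color attributes that dot_iter() puts on virtual nodes.

```python
def dot_lines(trans, num_inputs, num_states, num_outputs, label=""):
    """Hypothetical DOT emitter mimicking the layout of dot_iter() output."""
    kinds = ["i"] * num_inputs + ["s"] * num_states + ["o"] * num_outputs
    # Global index of the first state of each kind, used for the per-kind labels.
    firsts = {"i": 0, "s": num_inputs, "o": num_inputs + num_states}
    yield "digraph %s{\n" % (label + " " if label else "")
    yield "rankdir=LR;\n"
    for g, kind in enumerate(kinds):
        yield 'state%d [label="%s%d", style=bold];\n' % (g, kind, g - firsts[kind])
    # One edge per nonzero transition, labelled with its probability.
    for src, row in enumerate(trans):
        for dst, p in enumerate(row):
            if p > 0.0:
                yield 'state%d -> state%d [label="%s"];\n' % (src, dst, p)
    yield "}\n"
```

For example, "".join(dot_lines(trans, 1, 1, 1)) on a one-state model yields nodes i0, s0, and o0 with one edge per nonzero transition.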

Apply transition accumulators if they have anything in them. This function will clear existing accumulators, so it may safely be called more than one time.

Find the expected duration in each state for a given frame_count. Includes an expected duration for each virtual ‘exit’ state. If verbose is True, print per-frame occupancy probabilities for each state.

Find the state-level Viterbi alignment for the given sequence, assuming it reaches some exit state on the last frame. sequence should be a sequence of datapoints in the form of Numpy arrays. If start_state is not None, it should be the index of the virtual start state from which to begin the alignment. If start_state is None, equal weighting is used if there are multiple virtual input states. The return is a triple of Numpy arrays with shape (n+1,), where n is the number of frames in sequence. The first element is an array of state indices. Each entry but the last will be between 0 and hmm.num_states - 1, indicating a ‘real’ HMM state. The last entry will be between hmm.num_states and hmm.num_states + hmm.num_outputs - 1, indicating the most likely exit state for the sequence. The second element is an array of scores for the model in the most likely state for each frame; the final, (n+1)st entry will be 0. The third element is an array of transition probabilities between the most likely states. If start_state is None, the first transition probability will be 0.0.
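The return convention above can be illustrated with a small, self-contained Viterbi sketch. It is an assumption-laden illustration, not this method's implementation: viterbi_align is a hypothetical name, the transition matrix is a nested list in the [inputs, real states, outputs] layout, and lik[t][s] is a precomputed observation likelihood table standing in for scoring datapoints through gmm_mgr. It works in the probability domain, so it is only suitable for short sequences.

```python
def viterbi_align(trans, num_inputs, num_states, num_outputs, lik, start_state=None):
    """Hypothetical sketch of a state-level Viterbi alignment (probability domain)."""
    first, last = num_inputs, num_inputs + num_states  # first real / first output column
    states = range(num_states)
    if start_state is None:
        # Equal weighting over the virtual inputs; keep the best entry per state.
        w = 1.0 / num_inputs
        entry = [max(w * trans[i][first + s] for i in range(num_inputs)) for s in states]
    else:
        entry = [trans[start_state][first + s] for s in states]
    delta = [entry[s] * lik[0][s] for s in states]
    back = []  # back[t - 1][s] is the best predecessor of state s on frame t
    for t in range(1, len(lik)):
        prev, delta, ptr = delta, [], []
        for s in states:
            best = max(states, key=lambda r: prev[r] * trans[first + r][first + s])
            ptr.append(best)
            delta.append(prev[best] * trans[first + best][first + s] * lik[t][s])
        back.append(ptr)
    # The path must leave through some exit state after the last frame.
    score, r, o = max((delta[r] * trans[first + r][last + o], r, o)
                      for r in states for o in range(num_outputs))
    if score == 0.0:
        raise ValueError("no path reaches an exit state on the last frame")
    path = [r]
    for ptr in reversed(back):
        path.append(ptr[path[-1]])
    path.reverse()
    n = len(lik)
    state_ids = path + [num_states + o]  # last entry names the exit state
    scores = [lik[t][path[t]] for t in range(n)] + [0.0]
    tprobs = [0.0 if start_state is None else trans[start_state][first + path[0]]]
    tprobs += [trans[first + path[t - 1]][first + path[t]] for t in range(1, n)]
    tprobs.append(trans[first + path[-1]][last + o])
    return state_ids, scores, tprobs
```

For a two-state left-to-right model whose first state fits frames 0 and 1 and whose second state fits frame 2, this returns states [0, 0, 1, 2], where 2 is the single exit state. Replacing products with sums of logarithms is the usual way to avoid underflow on long sequences.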

Score a sequence of datapoints.

Get a decoding instance for this Hmm.

Decoding state is managed by an HmmDecodeInstance. This allows a given Hmm to be used for decoding at different parts of a decoding network. Each place in the network has its own instance of an HmmDecodeInstance to handle the details of the likelihoods at that place in the network. user_data may be anything at all; it’s just a place to store whatever per-instance information a decoder needs to have and will never be modified or interpreted by the instance. See HmmDecodeInstance.

Initialize this model for a backward BW pass on the sequence. Note that scoring is not done in this call, only in init_for_forward_pass, so be sure to do forward passes before backward passes.

Initialize this model for a forward BW pass on the sequence. Note that scoring is done in this call, but not in init_for_backward_pass, so be sure to do forward passes before backward passes.

>>> num_states = 3

>>> dummies = ( DummyModel(2, 0.1), DummyModel(2, 0.2), DummyModel(2, 0.4), DummyModel(2, 0.4) )

>>> mm = GmmMgr(dummies)

>>> models = range(num_states)

>>> hmm1 = build_forward_hmm_compact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)))

>>> f = cStringIO.StringIO()

>>> cPickle.dump(hmm1, f, cPickle.HIGHEST_PROTOCOL)

>>> f.seek(0)

>>> hmm2 = cPickle.load(f)

>>> hmm2.init_from_pickle(mm)

True if there is a path through the Hmm that doesn’t require an observation; False otherwise.
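Given the layout constraints stated for build_hmm() (nothing may transition into a virtual input, nothing may transition out of a virtual output) and the fact that every real state consumes an observation, an observation-free path can only be a direct arc from a virtual input to a virtual output. The following sketch checks exactly that; it is an inference from those constraints, not this property's implementation, and has_epsilon_path is a hypothetical name operating on a nested-list matrix.

```python
def has_epsilon_path(trans, num_inputs, num_states):
    """Hypothetical check: does any virtual input connect straight to a virtual output?"""
    first_output = num_inputs + num_states
    # Rows are sources; only input rows and output columns matter here.
    return any(p > 0.0 for row in trans[:num_inputs] for p in row[first_output:])
```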

Do one frame of backward processing and return the output beta values. Resets the input beta values in context to 0 for the next frame. This function should be called one time more than the length of sequence passed to init_for_forward_pass; the return from the first call will be None.

Do one frame of backward processing and return the output beta values. Resets the input beta values in context to 0 for the next frame. This function should be called one time more than the length of sequence passed to init_for_forward_pass; the return from the first call will be None.

Do one frame of forward processing and return the output alpha values. Resets the input alpha values in context to 0 for the next frame. This function should be called one time more than the length of sequence passed to init_for_forward_pass; the return from that final call will be None.
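The frame-by-frame forward recursion behind these methods can be sketched for the standalone case, where all mass enters through the virtual inputs on the first frame and must leave through a virtual output after the last frame. This is a hypothetical illustration, not the module's code: standalone_forward is an invented name, the matrix is a nested list in the [inputs, real states, outputs] layout, lik[t][s] is a precomputed observation likelihood, equal weight is given to each input, and direct input-to-output arcs are ignored.

```python
def standalone_forward(trans, num_inputs, num_states, num_outputs, lik):
    """Hypothetical sketch: total forward likelihood of a sequence in standalone mode."""
    first, last = num_inputs, num_inputs + num_states
    w = 1.0 / num_inputs  # equal weight on each virtual input
    # alpha[s]: probability of having emitted frame 0 and being in real state s.
    alpha = [w * sum(trans[i][first + s] for i in range(num_inputs)) * lik[0][s]
             for s in range(num_states)]
    for frame in lik[1:]:
        # Sum over predecessors, then scale by this frame's observation likelihood.
        alpha = [sum(alpha[r] * trans[first + r][first + s] for r in range(num_states)) * frame[s]
                 for s in range(num_states)]
    # Mass that leaves through any virtual output after the last frame.
    return sum(alpha[r] * trans[first + r][last + o]
               for r in range(num_states) for o in range(num_outputs))
```

With a single real state that loops with probability 0.5 and exits with probability 0.5, and likelihood 1 on every frame, three frames give 0.5 ** 3 = 0.125.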

Sample an Hmm

Returns a tuple of samples from the states of the Hmm; each sample is a pair (state_index, obs), where state_index is the 0-based index of the real state used to sample and obs is the sampled observation. Note that the number of samples is not fixed unless the transition matrix of the Hmm is deterministic in the sense that a certain number of transitions is guaranteed. If provided, start_state_dist is a distribution over the input states of the Hmm (so it must sum to 1). If not provided, a uniform distribution over input states will be used.
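A minimal sketch of the sampling walk described above, assuming a nested-list transition matrix in the [inputs, real states, outputs] layout. sample_path and draw_obs are hypothetical names: draw_obs(state_index, rng) stands in for drawing an observation from the state's model. The walk starts at a virtual input, emits one observation per real state visited, and stops at the first virtual output, so the length varies with the random transitions.

```python
import random


def sample_path(trans, num_inputs, num_states, draw_obs, start_state_dist=None, rng=random):
    """Hypothetical sketch: sample (state_index, obs) pairs until an output is reached."""
    if start_state_dist is None:
        start_state_dist = [1.0 / num_inputs] * num_inputs  # uniform over inputs
    state = rng.choices(range(num_inputs), weights=start_state_dist)[0]
    samples = []
    while True:
        # Follow one outgoing transition according to this state's row.
        state = rng.choices(range(len(trans)), weights=trans[state])[0]
        if state >= num_inputs + num_states:  # reached a virtual output
            return tuple(samples)
        real = state - num_inputs  # 0-based index of the real state
        samples.append((real, draw_obs(real, rng)))
```

Note that random.choices normalizes the weights itself; passing a seeded random.Random instance as rng makes the walk reproducible.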

Train a model on a set of data sequences

data should be an iterable of data sequences; each sequence should contain Numpy arrays representing the datapoints. Training is done in standalone mode.

Bases: object

A structure for holding the information for doing a Viterbi decode with an HMM.

Examples:

Create an Hmm with single-state skipping and observation likelihoods of one

>>> dummies = tuple(repeat(DummyModel(2, 1.0), 5))

>>> mm = GmmMgr(dummies)

>>> models = range(5)

>>> hmm0 = build_forward_hmm_compact(5, mm, models, 3, ((0.0625,) * 5, (0.75,) * 5, (0.1875,) * 5))

Get a decoding instance for this HMM

>>> hdc0 = hmm0.get_decode_instance('A')

>>> hdc0.hmm is hmm0

True

>>> hdc0.user_data == 'A'

True

Inject some mass. Note that the total injected mass sums to one.

>>> scores = np.array((0.25, 0.75))

>>> ids = np.array((25, 75))

>>> hdc0.activate(scores, ids)

Use the token_string property to see a reader-friendly snapshot of the current state of the instance:

>>> print hdc0.token_string

I0 I1 | S0 S1 S2 S3 S4 | O0 O1

0.250000 0.750000 | 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000

25 75 | -1 -1 -1 -1 -1 | -1 -1

input_activation_ids_seen: set([25, 75])

output_activation_ids_seen: set([])

current_activation_ids: set([25, 75])

lost_activation_ids: set([])

Note that -1 is used as a sentinel value for activation ids which have not been set yet. This value is also available as HmmDecodeInstance.NO_ID.

Don’t access properties when the instance isn’t in the right state - see individual properties for these restrictions.

>>> hdc0.internal_scores

Traceback (most recent call last):

...

ValueError: Operation internal_scores requires HmmDecodeInstance in phase apply_likelihoods but phase is activate

Don’t construct these for yourself - use the factory function in the Hmm class instead.

Add mass to this sequence’s inputs. scores and activation_ids should both be Numpy arrays with shape (hmm.num_inputs,). The first array represents the scores to put on each input; the second represents the best activation id for each input.

Scale state likelihoods by the observation likelihood and multiply by the normalization.

Scale state likelihoods by the observation likelihood and multiply by the normalization.

The set of all activation ids that are currently active, including those on both inputs and outputs. The set is updated by calls to both activate(), which might add some new elements, and to pass_tokens(), which might remove some elements. It may be accessed any time.

True if any output is active, that is if it has any probability mass. This property can only be accessed after a call to pass_tokens().

The set of all activation ids that have ever been seen by this instance. The set is updated by calls to activate(), but may be accessed any time.

The likelihood scores for the internal states, that is, excluding inputs and outputs. This property can only be accessed after a call to apply_likelihoods().

The set of all activation ids that have been seen by this instance, but which have never appeared on an output state and are not current in this instance. This property is always equivalent to the set input_activation_ids_seen - (output_activation_ids_seen | current_activation_ids). The set is updated by calls to both activate(), which might remove some elements, and to pass_tokens(), which might add some new elements. It may be accessed any time.

Note

Calling activate() can remove an element from this set: an activation id that was seen and lost earlier stops being lost when it is reactivated. Thus an activation id can move from being lost to being current. But once an activation id reaches the outputs, it will never become lost.
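The invariant for lost_activation_ids is plain set algebra. The helper below is a hypothetical stand-alone function (not part of HmmDecodeInstance), checked against set values that appear in the build_multipath_trans() doctest of this module.

```python
def lost_activation_ids(input_seen, output_seen, current):
    """Hypothetical helper: ids seen on inputs that are neither current nor ever output."""
    return set(input_seen) - (set(output_seen) | set(current))
```

For instance, with input ids {0, 1, 2, 3, 4}, no outputs yet, and current ids {0, 2, 3, 4}, id 1 is lost; reactivating all five ids makes the lost set empty again.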

The best internal likelihood score. This property can only be accessed after a call to apply_likelihoods().

The best output likelihood scores. This property can only be accessed after a call to pass_tokens().

The worst internal likelihood score that is not zero. This property can only be accessed after a call to apply_likelihoods().

It is an error to call this if max_internal_score is zero.

The set of all activation ids that have ever appeared on an output state in this instance. The set is updated by calls to pass_tokens(), but may be accessed any time.

The output scores and activation ids for activation of successors. This property is a pair of Numpy arrays. This property can only be accessed after a call to pass_tokens().

The output scores and activation ids for activation of successors; must be called immediately after apply_likelihoods() has been called.

Move tokens (scores and activations) along transitions.

A string displaying the decoding instance in an easy-to-read fashion. This property is meant for diagnostic purposes and can be accessed any time.

The likelihood scores and activation ids of all states, including inputs and outputs, as a pair of Numpy arrays. This property is meant for diagnostic purposes and can be accessed any time.

The index of the best predecessor for each state in the HMM. A value of -1 indicates no predecessor (because the likelihood of the state is 0). This property can only be accessed after a call to pass_tokens().
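The token, predecessor, and NO_ID conventions above follow the usual max-product (Viterbi) token-passing step: each destination keeps only the best-scoring incoming token, together with that token's activation id. The sketch below shows one such step over a whole nested-list transition matrix. It is a hypothetical illustration (pass_tokens_step is not this class's method) and ignores the phasing and likelihood scaling that the real instance performs.

```python
NO_ID = -1  # sentinel for "no activation id", as with HmmDecodeInstance.NO_ID


def pass_tokens_step(scores, ids, trans):
    """Hypothetical max-product step: each destination keeps its best incoming token."""
    n = len(scores)
    new_scores, new_ids, preds = [], [], []
    for dst in range(n):
        # Best source for this destination; ties go to the lowest index.
        best_src = max(range(n), key=lambda src: scores[src] * trans[src][dst])
        best = scores[best_src] * trans[best_src][dst]
        new_scores.append(best)
        new_ids.append(ids[best_src] if best > 0.0 else NO_ID)
        preds.append(best_src if best > 0.0 else -1)  # -1: no predecessor
    return new_scores, new_ids, preds
```

In a toy case where sources with ids 10 and 20 both feed one destination, the token from id 20 (score 2.0 times 0.25) loses to the one from id 10 (score 1.0 times 1.0), which is how an activation id can become lost.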

Bases: object

An abstract scoring object interface.

Build a forward-transitioning Hmm from models and transition probabilities. models must have length num_states and must contain valid indices for gmm_mgr. transition_order must be at least 2, which corresponds to a Bakis model (self-loops and 1-forward arcs). transition_prob_sequences must have transition_order elements, each of which is a sequence of transition probabilities of length num_states. The first sequence is the self-loop probs, the second is the 1-forward probs, and so on. The model built is compact in the sense that multiple input and output transitions are allowed to ‘share’ a common virtual input or output, so the number of virtual input/output nodes is linear in the order. When used in higher-level graph structures (like UttLattice), models of this type result in training which approximates, but does not exactly capture, the results of using a single standalone model.

>>> dummies = ( DummyModel(2, 0.1), DummyModel(2, 0.2), DummyModel(2, 0.4), DummyModel(2, 0.4) )

>>> mm = GmmMgr(dummies)

>>> num_states = 3

>>> models = range(num_states)

>>> hmm1 = build_forward_hmm_compact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)))

>>> hmm2_log = build_forward_hmm_compact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)))

>>> hmm3 = build_forward_hmm_compact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)))

>>> hmm1 == hmm3

True
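The compact layout can be read off the dot_iter() doctest for the four-state, order-3 model built earlier on this page: input i0 enters s0 and i1 enters s1 with probability 1.0; s2’s forward-two arc and s3’s forward-one arc share output o0; and s3’s forward-two arc goes to o1. The following sketch re-creates that full (n, n) matrix as a nested list. It is inferred from that output rather than taken from this function’s source, compact_forward_trans is a hypothetical name, and it does not cover the exact variant.

```python
def compact_forward_trans(num_states, transition_prob_sequences):
    """Hypothetical re-creation of a compact forward transition matrix,
    laid out as [inputs, real states, outputs]."""
    order = len(transition_prob_sequences)
    if order < 2:
        raise ValueError("transition order must be at least 2")
    num_inputs = num_outputs = order - 1  # linear in the order
    if num_states < num_inputs:
        raise ValueError("this sketch assumes num_states >= order - 1")
    n = num_inputs + num_states + num_outputs
    trans = [[0.0] * n for _ in range(n)]
    for j in range(num_inputs):
        trans[j][num_inputs + j] = 1.0  # virtual input j enters real state j
    for k, probs in enumerate(transition_prob_sequences):
        if len(probs) != num_states:
            raise ValueError("each sequence needs num_states probabilities")
        for s, p in enumerate(probs):
            dest = s + k  # k == 0 is the self-loop, k == 1 the 1-forward arc, ...
            # Outputs follow the real states, so overshooting the last state by m
            # lands on virtual output m - 1, i.e. column num_inputs + dest.
            trans[num_inputs + s][num_inputs + dest] = p
    return trans
```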

Build a forward-transitioning Hmm from models and transition probabilities. models must have length num_states and must contain valid indices for gmm_mgr. transition_order must be at least 2, which corresponds to a Bakis model (self-loops and 1-forward arcs). transition_prob_sequences must have transition_order elements, each of which is a sequence of transition probabilities of length num_states. The first sequence is the self-loop probs, the second is the 1-forward probs, and so on. The model built is exact in the sense that input and output transitions are not allowed to share a common virtual input or output, so the number of virtual input/output nodes is quadratic in the order. When used in higher-level graph structures (like UttLattice), models of this type result in training which exactly captures the results of using a single standalone model.

>>> dummies = ( DummyModel(2, 0.1), DummyModel(2, 0.2), DummyModel(2, 0.4), DummyModel(2, 0.4) )

>>> mm = GmmMgr(dummies)

>>> num_states = 3

>>> models = range(num_states)

>>> hmm1 = build_forward_hmm_exact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)))

>>> hmm2_log = build_forward_hmm_exact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)))

>>> hmm3 = build_forward_hmm_exact(num_states, mm, models, 2, ((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)))

>>> hmm1 == hmm3

True

Build an Hmm from models and transition probabilities. gmm_mgr is a GmmMgr instance which holds the underlying models for this HMM; models is an iterable of num_states indices into gmm_mgr. num_inputs and num_outputs specify the number of ways into and out of the model. transition_probs is a Numpy array with shape (n,n), where n = num_inputs + num_states + num_outputs. Transitions into the (virtual) input states must be zero, transitions out of the (virtual) output states must be zero.

>>> dummies = ( DummyModel(2, 0.1), DummyModel(2, 0.2), DummyModel(2, 0.4), DummyModel(2, 0.4) )

>>> mm = GmmMgr(dummies)

>>> trans = np.array(((0.0, 1.0, 0.0),

... (0.0, 0.999999999999, 0.000000000001),

... (0.0, 0.0, 0.0)))

>>> hmm0 = build_hmm(num_states=1, gmm_mgr=mm, models=(0,), num_inputs=1, num_outputs=1, transition_probs=trans)
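The shape and zero-transition requirements stated above are easy to check before calling build_hmm(). This is a hypothetical helper (check_transition_layout is not part of the module) that enforces only the constraints listed in the description, on a nested-list or tuple matrix; it does not check that rows sum to one.

```python
def check_transition_layout(trans, num_inputs, num_states, num_outputs):
    """Hypothetical check of the constraints stated for build_hmm()."""
    n = num_inputs + num_states + num_outputs
    if len(trans) != n or any(len(row) != n for row in trans):
        raise ValueError("transition_probs must have shape (%d, %d)" % (n, n))
    # Nothing may transition into a virtual input state.
    for src, row in enumerate(trans):
        for dst in range(num_inputs):
            if row[dst] != 0.0:
                raise ValueError("transition from %d into virtual input %d" % (src, dst))
    # Nothing may transition out of a virtual output state.
    for src in range(num_inputs + num_states, n):
        if any(p != 0.0 for p in trans[src]):
            raise ValueError("transition out of virtual output state %d" % src)
```

Both the one-state matrix in this example and the six-by-six matrix used for hmm3 in the dot_iter() doctest pass this check.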

A regular, non-trivial, acyclic matrix for testing

>>> hmm = build_multipath_trans()

Test that reveals details of score and activation ID propagation using build_multipath_trans().

>>> num_states, num_inputs, num_outputs, trans = build_multipath_trans()

>>> dummies = tuple(DummyModel(1, 1) for _ in xrange(num_states))

>>> mm = GmmMgr(dummies)

>>> models = (0,) * num_states

>>> hmm = build_hmm(num_states=num_states, gmm_mgr=mm, models=models, num_inputs=num_inputs, num_outputs=num_outputs, transition_probs=trans)

>>> decode_hmm = HmmDecodeInstance(hmm)

>>> vals = np.fromiter((1<<x for x in xrange(num_inputs)), dtype=int)

>>> act_ids = np.arange(num_inputs, dtype=int)

>>> decode_hmm.activate(vals, act_ids)

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

1.000000 2.000000 4.000000 8.000000 16.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

0 1 2 3 4 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([])

current_activation_ids: set([0, 1, 2, 3, 4])

lost_activation_ids: set([])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 1.000000 4.000000 4.000000 8.000000 0.000000 0.000000 0.000000 8.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

-1 -1 -1 -1 -1 | 0 2 3 4 -1 -1 -1 4 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([])

current_activation_ids: set([0, 2, 3, 4])

lost_activation_ids: set([1])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 0., 0., 0., 0., 8.]), array([-1, -1, -1, -1, 4]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 1.000000 4.000000 4.000000 8.000000 0.000000 0.000000 0.000000 8.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

-1 -1 -1 -1 -1 | 0 2 3 4 -1 -1 -1 4 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([])

current_activation_ids: set([0, 2, 3, 4])

lost_activation_ids: set([1])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 1.000000 4.000000 4.000000 0.000000 0.000000 0.000000 0.000000 8.000000 | 0.000000 0.000000 0.000000 0.000000 8.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 0 2 3 -1 -1 -1 -1 4 | -1 -1 -1 -1 4

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([4])

current_activation_ids: set([0, 2, 3, 4])

lost_activation_ids: set([1])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 0., 0., 0., 4., 4.]), array([-1, -1, -1, 4, 4]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 1.000000 4.000000 4.000000 0.000000 0.000000 0.000000 0.000000 8.000000 | 0.000000 0.000000 0.000000 0.000000 8.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 0 2 3 -1 -1 -1 -1 4 | -1 -1 -1 -1 4

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([4])

current_activation_ids: set([0, 2, 3, 4])

lost_activation_ids: set([1])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 1.000000 4.000000 4.000000 0.000000 | 0.000000 0.000000 0.000000 4.000000 4.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 0 2 3 -1 | -1 -1 -1 4 4

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([4])

current_activation_ids: set([0, 2, 3, 4])

lost_activation_ids: set([1])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 1., 2., 2., 4., 0.]), array([ 0, 2, 2, 3, -1]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 1.000000 4.000000 4.000000 0.000000 | 0.000000 0.000000 0.000000 4.000000 4.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 0 2 3 -1 | -1 -1 -1 4 4

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([4])

current_activation_ids: set([0, 2, 3, 4])

lost_activation_ids: set([1])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 1.000000 2.000000 2.000000 4.000000 0.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | 0 2 2 3 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 2, 3, 4])

current_activation_ids: set([0, 2, 3])

lost_activation_ids: set([1])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 0., 0., 0., 0., 0.]), array([-1, -1, -1, -1, -1]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 1.000000 2.000000 2.000000 4.000000 0.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | 0 2 2 3 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 2, 3, 4])

current_activation_ids: set([0, 2, 3])

lost_activation_ids: set([1])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 2, 3, 4])

current_activation_ids: set([])

lost_activation_ids: set([1])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 0., 0., 0., 0., 0.]), array([-1, -1, -1, -1, -1]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 2, 3, 4])

current_activation_ids: set([])

lost_activation_ids: set([1])

>>> decode_hmm.activate(vals[::-1], act_ids[::-1])

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

16.000000 8.000000 4.000000 2.000000 1.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

4 3 2 1 0 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 2, 3, 4])

current_activation_ids: set([0, 1, 2, 3, 4])

lost_activation_ids: set([])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 16.000000 8.000000 1.000000 1.000000 0.000000 0.000000 0.000000 0.500000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

-1 -1 -1 -1 -1 | 4 3 1 1 -1 -1 -1 0 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 2, 3, 4])

current_activation_ids: set([0, 1, 3, 4])

lost_activation_ids: set([])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 0. , 0. , 0. , 0. , 0.5]), array([-1, -1, -1, -1, 0]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 16.000000 8.000000 1.000000 1.000000 0.000000 0.000000 0.000000 0.500000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

-1 -1 -1 -1 -1 | 4 3 1 1 -1 -1 -1 0 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 2, 3, 4])

current_activation_ids: set([0, 1, 3, 4])

lost_activation_ids: set([])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 16.000000 8.000000 1.000000 0.000000 0.000000 0.000000 0.000000 1.000000 | 0.000000 0.000000 0.000000 0.000000 0.500000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 4 3 1 -1 -1 -1 -1 1 | -1 -1 -1 -1 0

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 2, 3, 4])

current_activation_ids: set([0, 1, 3, 4])

lost_activation_ids: set([])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 0. , 0. , 0. , 0.5, 0.5]), array([-1, -1, -1, 1, 1]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 16.000000 8.000000 1.000000 0.000000 0.000000 0.000000 0.000000 1.000000 | 0.000000 0.000000 0.000000 0.000000 0.500000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 4 3 1 -1 -1 -1 -1 1 | -1 -1 -1 -1 0

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 2, 3, 4])

current_activation_ids: set([0, 1, 3, 4])

lost_activation_ids: set([])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 16.000000 8.000000 1.000000 0.000000 | 0.000000 0.000000 0.000000 0.500000 0.500000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 4 3 1 -1 | -1 -1 -1 1 1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 1, 2, 3, 4])

current_activation_ids: set([1, 3, 4])

lost_activation_ids: set([])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 16., 4., 4., 1., 0.]), array([ 4, 3, 3, 1, -1]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 16.000000 8.000000 1.000000 0.000000 | 0.000000 0.000000 0.000000 0.500000 0.500000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 4 3 1 -1 | -1 -1 -1 1 1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 1, 2, 3, 4])

current_activation_ids: set([1, 3, 4])

lost_activation_ids: set([])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 16.000000 4.000000 4.000000 1.000000 0.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | 4 3 3 1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 1, 2, 3, 4])

current_activation_ids: set([1, 3, 4])

lost_activation_ids: set([])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 0., 0., 0., 0., 0.]), array([-1, -1, -1, -1, -1]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 16.000000 4.000000 4.000000 1.000000 0.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | 4 3 3 1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 1, 2, 3, 4])

current_activation_ids: set([1, 3, 4])

lost_activation_ids: set([])

>>> decode_hmm.pass_tokens()

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 1, 2, 3, 4])

current_activation_ids: set([])

lost_activation_ids: set([])

>>> prev = map(tuple, decode_hmm.tokens)

>>> decode_hmm.apply_likelihoods((1,))

>>> assert map(tuple, decode_hmm.tokens) == prev

>>> decode_hmm.pass_output_tokens

(array([ 0., 0., 0., 0., 0.]), array([-1, -1, -1, -1, -1]))

>>> print decode_hmm.token_string

I0 I1 I2 I3 I4 | S0 S1 S2 S3 S4 S5 S6 S7 S8 S9 S10 S11 | O0 O1 O2 O3 O4

0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 0.000000 | 0.000000 0.000000 0.000000 0.000000 0.000000

-1 -1 -1 -1 -1 | -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 | -1 -1 -1 -1 -1

input_activation_ids_seen: set([0, 1, 2, 3, 4])

output_activation_ids_seen: set([0, 1, 2, 3, 4])

current_activation_ids: set([])

lost_activation_ids: set([])

Create a toy distribution of out-arc weights given a count n of out arcs, giving the forward-one arc the most weight:

- 1/4 to the self loop
- 1/2 + eps to forward one
- 1/8 to forward two
- 1/16 to forward three
- etc.
- 1/(2^n) to forward n-1

where eps = 1/(2^n).

>>> toy_probs(5)

(0.25, 0.53125, 0.125, 0.0625, 0.03125)

>>> toy_probs(1)

(1.0,)
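The weights above sum to one: 1/4 + 1/2 + (1/8 + ... + 1/2^n) + eps = 3/4 + (1/4 - 1/2^n) + 1/2^n = 1. Here is a short sketch that reproduces the two doctest results. It is a re-derivation from the description rather than the module’s own code, and it special-cases a single arc as a pure self loop, as the doctest shows.

```python
def toy_probs(num_arcs):
    """Sketch of the toy out-arc distribution: self loop, forward one, forward two, ..."""
    if num_arcs < 1:
        raise ValueError("need at least one out arc")
    if num_arcs == 1:
        return (1.0,)  # only a self loop
    eps = 0.5 ** num_arcs
    probs = [0.25, 0.5 + eps]
    # Forward k (k >= 2) gets 1/2^(k+1), so forward num_arcs - 1 gets 1/2^num_arcs.
    probs.extend(0.5 ** (k + 1) for k in range(2, num_arcs))
    return tuple(probs)
```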